| id | position | condition | rt |

|---|---|---|---|

| 101 | sitting | incongruent | 929.0741 |

| 101 | sitting | congruent | 888.3871 |

| 102 | sitting | incongruent | 988.1818 |

| 102 | sitting | congruent | 888.6176 |

| 103 | sitting | incongruent | 945.2174 |

| 103 | sitting | congruent | 842.6970 |

| 104 | sitting | incongruent | 913.6667 |

| 104 | sitting | congruent | 735.3125 |

| 105 | sitting | incongruent | 935.3125 |

| 105 | sitting | congruent | 818.9706 |

| 106 | sitting | incongruent | 867.1250 |

| 106 | sitting | congruent | 814.7429 |

| 107 | sitting | incongruent | 936.4000 |

| 107 | sitting | congruent | 785.2857 |

| 108 | sitting | incongruent | 764.7879 |

| 108 | sitting | congruent | 693.9429 |

| 109 | sitting | incongruent | 985.7308 |

| 109 | sitting | congruent | 961.8000 |

| 110 | sitting | incongruent | 976.2800 |

| 110 | sitting | congruent | 830.9355 |

| 112 | sitting | incongruent | 780.5588 |

| 112 | sitting | congruent | 706.3056 |

| 114 | sitting | incongruent | 950.6667 |

| 114 | sitting | congruent | 752.6471 |

| 115 | sitting | incongruent | 865.3000 |

| 115 | sitting | congruent | 702.5294 |

| 116 | sitting | incongruent | 734.6857 |

| 116 | sitting | congruent | 607.9167 |

| 117 | sitting | incongruent | 896.2258 |

| 117 | sitting | congruent | 815.1471 |

| 118 | sitting | incongruent | 854.0345 |

| 118 | sitting | congruent | 715.6875 |

| 119 | sitting | incongruent | 854.0833 |

| 119 | sitting | congruent | 797.0000 |

| 201 | standing | incongruent | 848.9412 |

| 201 | standing | congruent | 786.0571 |

| 202 | standing | incongruent | 930.9259 |

| 202 | standing | congruent | 933.1562 |

| 203 | standing | incongruent | 860.8182 |

| 203 | standing | congruent | 771.5556 |

| 204 | standing | incongruent | 895.0345 |

| 204 | standing | congruent | 767.9375 |

| 205 | standing | incongruent | 856.3333 |

| 205 | standing | congruent | 792.0000 |

| 206 | standing | incongruent | 907.2903 |

| 206 | standing | congruent | 858.3636 |

| 207 | standing | incongruent | 868.2188 |

| 207 | standing | congruent | 816.9722 |

| 208 | standing | incongruent | 780.1176 |

| 208 | standing | congruent | 682.0000 |

| 209 | standing | incongruent | 858.9706 |

| 209 | standing | congruent | 833.9394 |

| 210 | standing | incongruent | 934.1905 |

| 210 | standing | congruent | 909.6071 |

| 212 | standing | incongruent | 802.3030 |

| 212 | standing | congruent | 723.4545 |

| 214 | standing | incongruent | 883.6957 |

| 214 | standing | congruent | 804.3714 |

| 215 | standing | incongruent | 897.5312 |

| 215 | standing | congruent | 709.7647 |

| 216 | standing | incongruent | 711.2424 |

| 216 | standing | congruent | 624.0882 |

| 217 | standing | incongruent | 840.7333 |

| 217 | standing | congruent | 703.8056 |

| 218 | standing | incongruent | 901.6875 |

| 218 | standing | congruent | 807.5806 |

| 219 | standing | incongruent | 860.8421 |

| 219 | standing | congruent | 827.9600 |

Objectives

Today’s lab’s objectives are to:

- Learn about factorial ANOVA

- Learn how to conduct a factorial ANOVA in Jamovi

- Learn about running chi-squared tests in Jamovi

You’ll turn in an “answer sheet” on Brightspace. Please turn that in by the end of the weekend.

Factorial ANOVA

We’ll start with an example via Matthew Crump for this, based on data from Rosenbaum, Mama, & Algom (2017):

Rosenbaum, D., Mama, Y., & Algom, D. (2017). Stand by your Stroop: Standing up enhances selective attention and cognitive control. Psychological Science, 28(12), 1864–1867. https://doi.org/10.1177/0956797617721270

The paper asked the kind of odd question of whether standing up vs. sitting down influenced attention. They used the Stroop task—which you may have learned about in your classes—naming words based on the color of the letters rather than their content, which is easier when the word is the same color (e.g., red—congruent) and harder when different (e.g., red—incongruent). So we have a \(2\times{}2\) design, or a two-way factorial ANOVA. Factors are position (standing vs. sitting) and condition (congruent vs. incongruent). They had participants do a congruent Stroop while sitting and then do the incongruent Stroop. They also had folks do it while standing. Throughout, they measured how long it took for people to respond (reaction time—RT).

The data from experiment 1 follows:

Download the data from here or on Brightspace (called “exp.csv”), and open it in Jamovi.

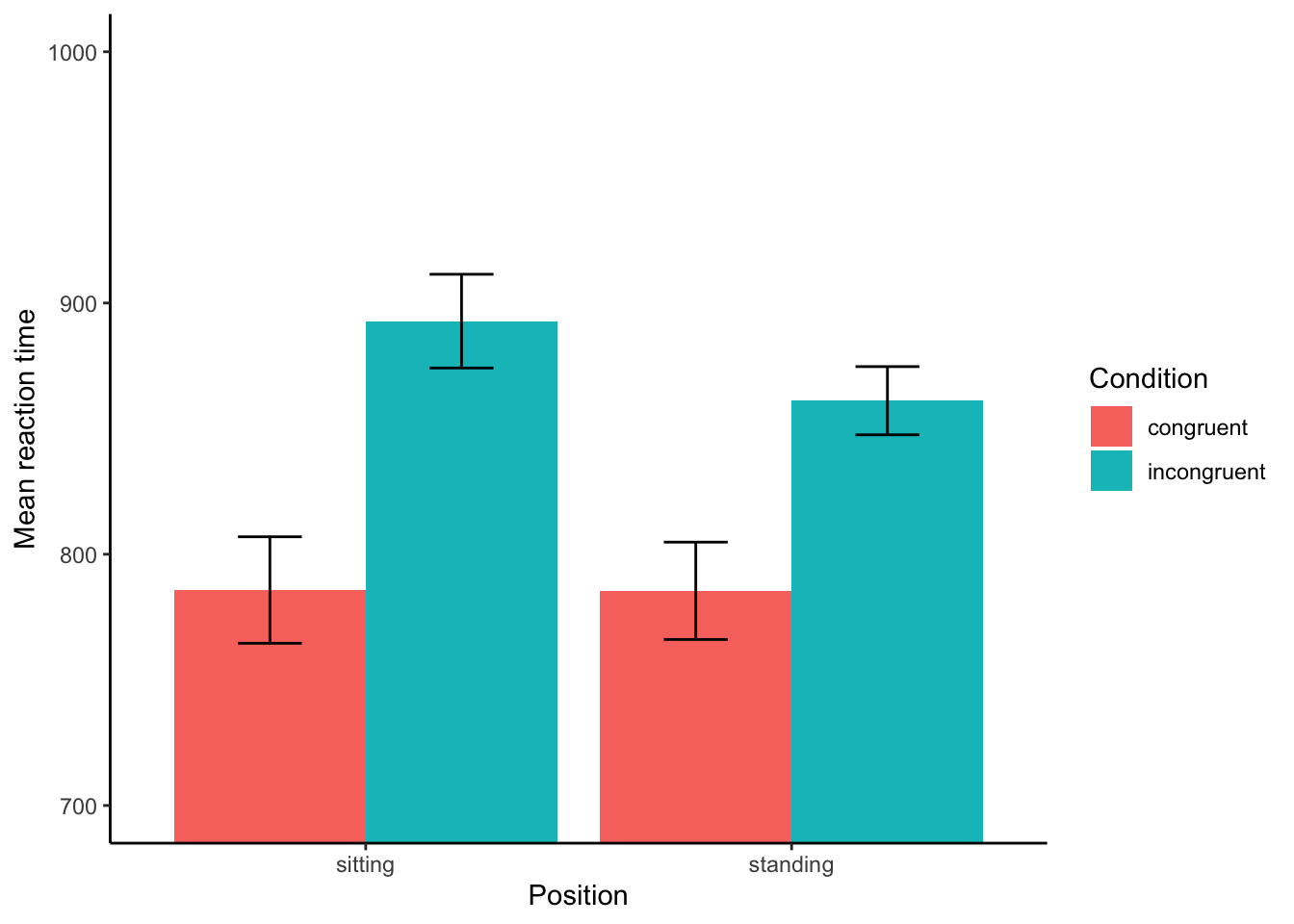

- Using the Descriptives plot menu in Jamovi, plot the four RT means broken up by condition (sitting/congruent; sitting/incongruent; standing/congruent; standing/incongruent). (You’ll want to have

rtin Variables andconditionandpositionin “Split By”.) Report this plot as #1 on your answer sheet.

You could also get the means and SEs from Descriptives, and plot the means and SEs in Google Sheets or Excel, much like we did in Lab 3.

Is it clear here whether there’s a difference between conditions? You may find that a box plot shows that better (in Jamovi), or in Google Sheets/Excel, you can change the y-axis to zoom in.

Below, you’ll see the plot I’ve created where I’m zooming to the RT between 700 and 1000ms. (I’ve also added the error bars.)

When we zoom in, it actually looks like there’s a difference. At least, there’s a main effect of condition, for sure—incongruent trials are responded to more slowly than normal. That would be a t-test, right? But is there a main effect of position? Probably not, perhaps? And is there an interaction? Well, that’s the question!

There’s more to the experiment in the original, but for us we can just conduct a factorial ANOVA.

Go to Jamovi and run the ANOVA. Put rt in the dependent variable section, and the other two terms we’re interested in (not id) in the Fixed Factors section. (Remember to not go to “one-way” ANOVA but just plain old ANOVA.)

Check the checkbox to also get the \(\eta^2\) (effect size). Your table should look quite similar to this:

| Sum of Squares | df | Mean Square | F | p | η2 | |

|---|---|---|---|---|---|---|

| position | 4348 | 1 | 4348 | 0.754 | 0.388 | 0.008 |

| condition | 141841 | 1 | 141841 | 24.600 | < .001 | 0.273 |

| position * condition | 4180 | 1 | 4180 | 0.725 | 0.398 | 0.008 |

| Residuals | 369011 | 64 | 5766 | — | — | — |

Spend some time trying to make sense of this. Which effects are statistically significant? Which have a meaningful effect size? Remember to start with the interaction. Then click through to confirm.

There is no significant interaction, \(F(1, 64)=0.73,p=.40\); there was no interaction between condition and positiion on the RT.

There is a main effect of condition, as we could probably tell from the plot. You can write it up using the df from above and the F and p-values: \(F(1, 64)=24.6,p<.05,\eta^2=0.27\); participants were slower to respond on incongruent trials. This effect is rather large. This implies that people responded differently in the congruent from incongruent condition. Good! We would expect to see that.

| condition | Mean RT | SD of RT |

|---|---|---|

| congruent | 785.6041 | 82.43226 |

| incongruent | 876.9473 | 68.15789 |

Yes, looks like there is a much slower reaction time (RT) to incongruent trials. They’re harder! This happens across conditions; you’ll see that if you look at your graph.

There’s no effect of position, though, \(F(1, 64)=0.75,p=.39\); participants didn’t respond more slowly when sitting or standing.

| position | Mean RT | SD of RT |

|---|---|---|

| sitting | 839.2722 | 97.57685 |

| standing | 823.2791 | 78.01138 |

And this makes sense, given that mean RT for the two positions are slightly different, but SD is large!

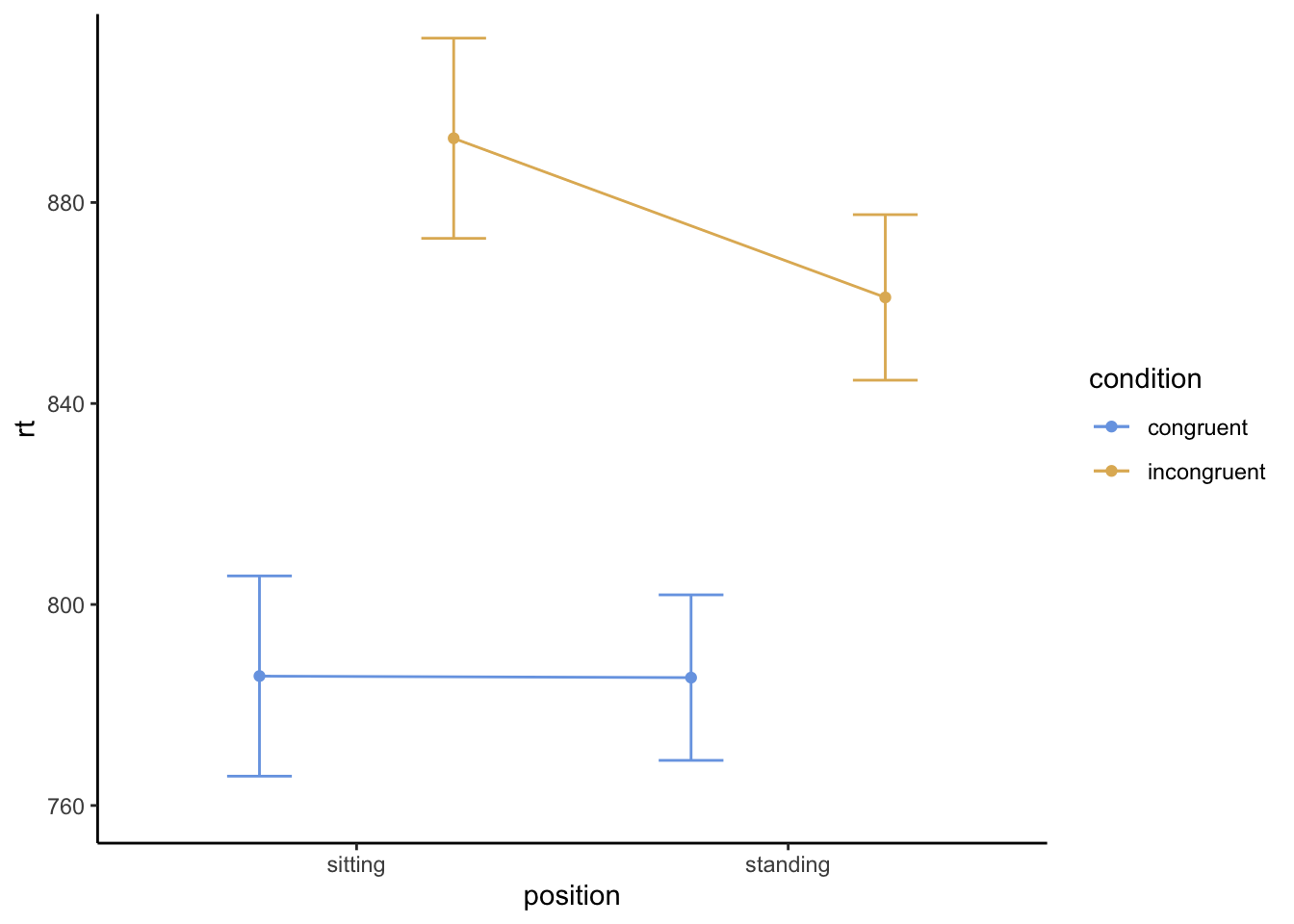

In Jamovi, go back to your ANOVA menu, and scroll down to “Estimated Marginal Means.” Put both condition and position under where it says Term 1. Under Output, check the checkbox for “Marginal means plots” and “Marginal means tables.” Switch error bars to show standard error.

You should see a table that’s very similar to the one we saw in Descriptives, and like the one you might have made in Google Sheets or Excel. The means should be identical; the SE should be the average of all four SEs you found. They’ve also shown you 95% confidence intervals.

The plot, though, is a little different: it’s just showing the means and error bars in lines and points, not a bar plot. It should look rather like this (note that if you put them in the reverse order, the plot will flip the factors; that would be fine, but will look different):

Does this plot provide different information from yours? More or less? My general sense: it’s about the same!

Interaction? What interaction?!

Because your interaction was non-significant, you could consider re-running the ANOVA without it. We’ve no real reason to suppose that we should do that here—our hypothesis involved it, I think!—but let’s learn to do it anyway. In Jamovi, scroll back up under the ANOVA to “Model”, and remove “condition * position” from the right side.

You’ll see that everything changes! The plot changes a bit (it’s using estimates from the model rather than the real data, now), and the ANOVA table looks like this:

| Sum of Squares | df | Mean Square | F | p | η2 | |

|---|---|---|---|---|---|---|

| condition | 141841 | 1 | 141841 | 24.705 | < .001 | 0.273 |

| position | 4348 | 1 | 4348 | 0.757 | 0.387 | 0.008 |

| Residuals | 373191 | 65 | 5741 | — | — | — |

What’s changed? Why do you think it has changed?

Removing the interaction means that the model changed a little bit. Since the interaction wasn’t significant—it wasn’t adding much to the model!—the values haven’t shifted much. Since the \(MS_{within}\) (under Residuals) hasn’t changed much, the \(F\) values don’t change much either. (And therefore, neither have the \(p\)s.)

The plot actually has changed more, though. This is because it’s using “estimated marginal means” based on the model, rather than the actual means we were calculating.

Assumptions Made

We also have been discussing our assumptions in class. In Jamovi, you can click on “Assumption Checks” and run tests of the assumption of normality and homogeneity of variance. Re-run the model with position * condition included, then add the assumption checks.

You’ll see Levene’s test for homogeneity of variance, which we can report as \(F(3, 64)=1.39,p=.25\). If this were significant, it would mean we were violating our assumption that variance is similar between groups. Since it’s not, we can continue with this assumption.

You’ll also see the Shapiro-Wilk test for normality. Again, the p-value is non-significant (\(p=.37\)) which means that our assumption is not violated. The residuals are (relatively) normal. You can also see that in the Q-Q plot.

In principle, if these were significant, we’d need to make some sort of correction.

Chi-square tests

A chi-square[d] test (\(\chi^2\)) asks whether observed frequencies fit an expected pattern. (“Observed” [what you’ve got] vs. “expected” [what you expected] frequencies.)

In the goodness of fit test, we compare how well a single nominal variable’s distribution fits an expected distribution of that variable.

In the chi-squared test of independence, we test hypotheses about the relationship between two nominal variables, comparing observed frequencies to the frequencies we would expect if there were no relationship.

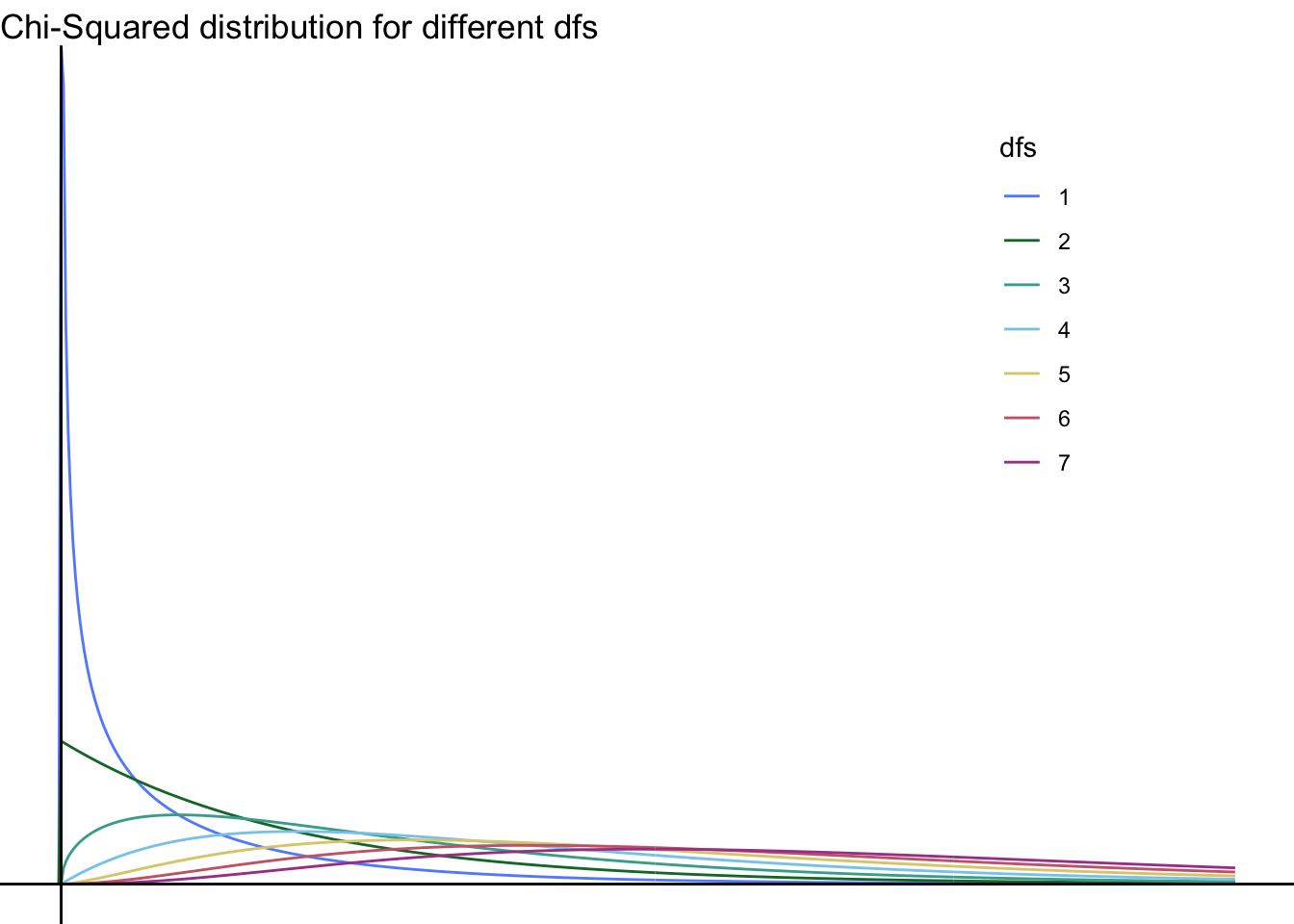

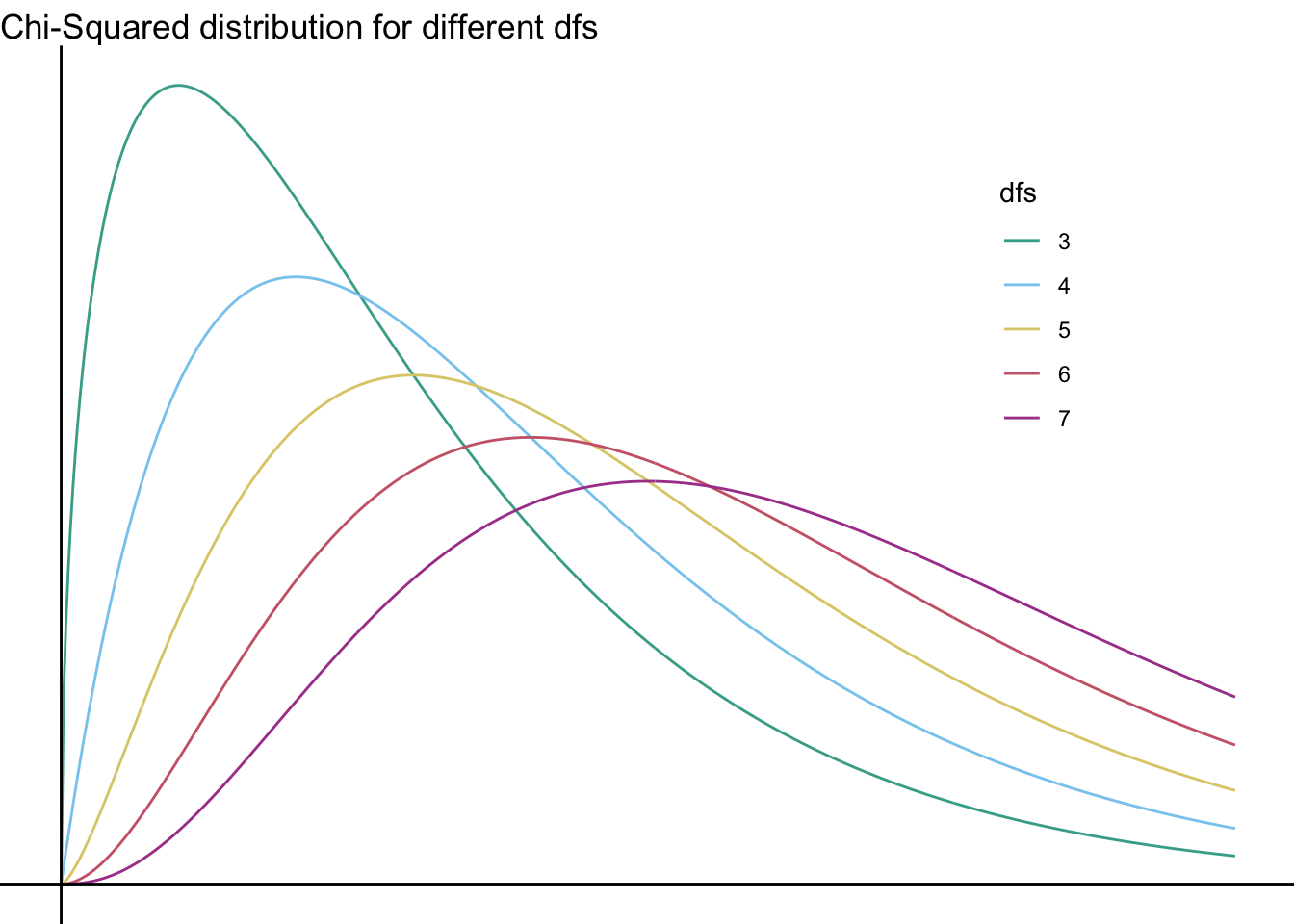

The test is based on the chi-squared distribution, which we’ve discussed in class.

The distribution

The chi-square distribution looks different at different degrees of freedom—take a look:

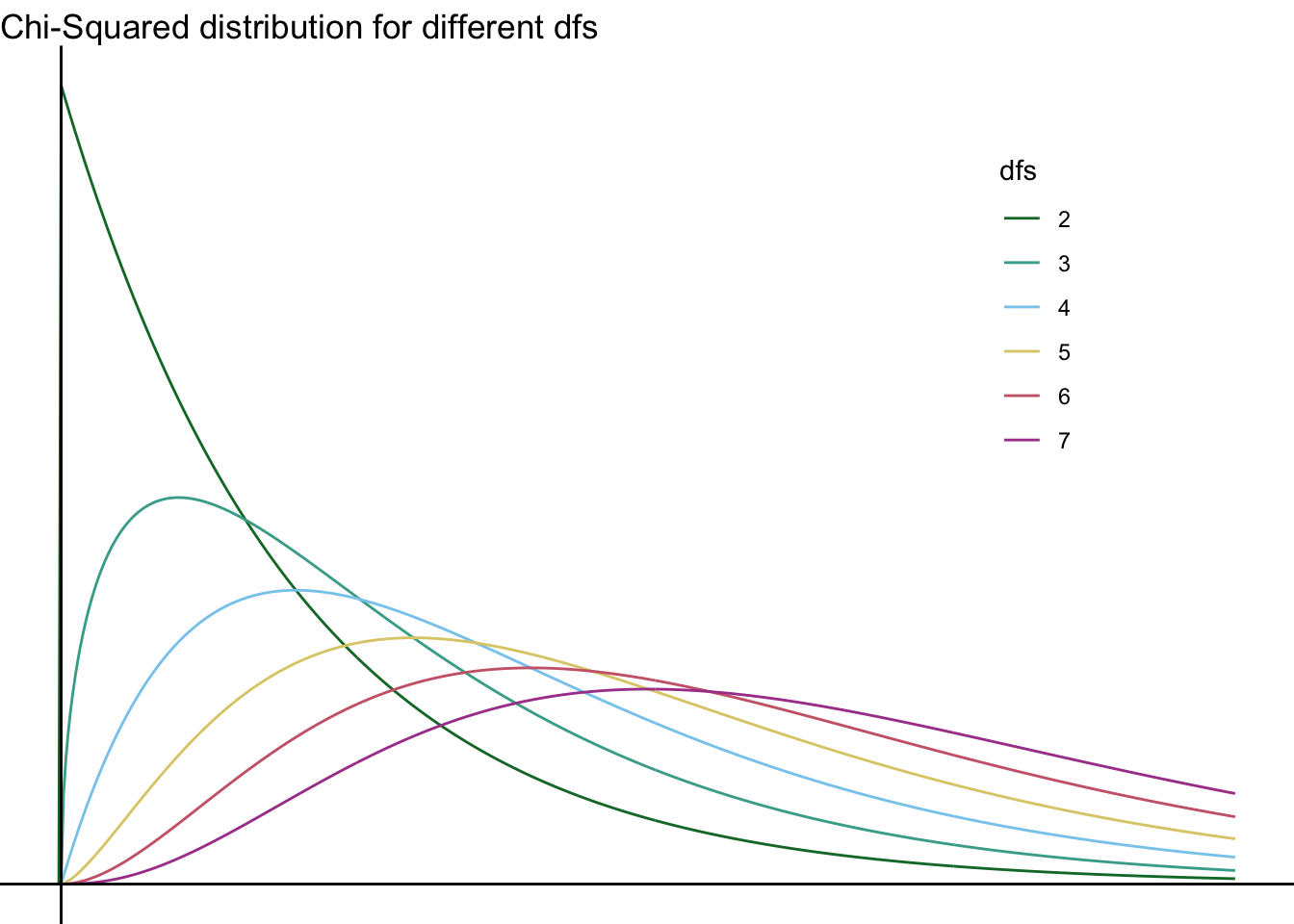

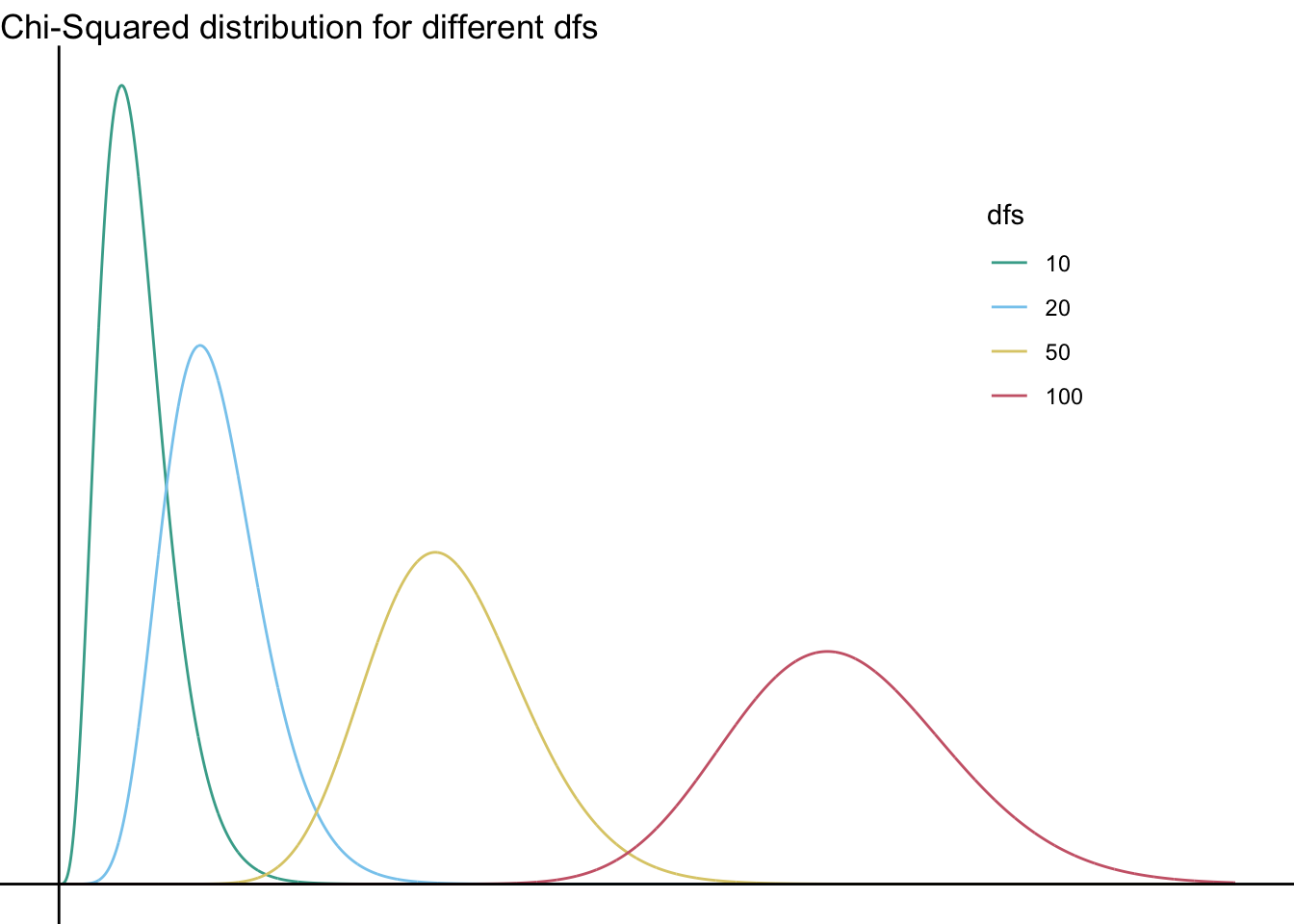

As you can see, the \(\chi^2\) distribution with \(\mathit{df}=1\) is rather different from the others; here—take a look without it:

They continue to differ even without \(\mathit{df}=2\):

As you can see, none of these distributions looks normal… you can’t (and should not!) run a useful chi-squared test with 100 categories, but if you could it would finally start to look sort of normal—just shifted to the right:

(And as we discussed in class, the cut-off for significance of that test with \(df=100\) would be… absurd. It’s very high: \(\chi^2_{crit}(100)124.3\)… For comparison, recall that the cutoff for \(df=1\) is 3.84.)

Using the chi-squared test in Jamovi

The chi-squared tests in Jamovi are under the menu Frequencies.

First, the goodness of fit test

The function can help you determine whether there are the same number of participants who fall into the same boxes across conditions. (e.g., are the same number of participants selecting different gender options?)

In this kind of test, the null hypothesis is that there are the same number of participants in each group—i.e., that there are no differences between conditions. (Put another way, it’s the same percentage likelihood [probability] that any box will be chosen.) The chi-squared test for goodness of fit determines whether it is unlikely that the data comes from the null distribution.

For example, imagine that there are 100 participants who all give you the type of pet they have, and you get the following data. The null hypothesis is that (of the four pet types) there is a 25% chance that any given participant would choose them. The research hypothesis is that this is not the case—some pets are more likely to be owned by participants.

I’ve created some fake data—the below imagines that of the folks polled, 40 have cats, 55 have dogs, 3 have parakeets, and somehow 2 have pot-belly pigs:

| participant_id | pet |

|---|---|

| 1 | cats |

| 2 | cats |

| 3 | cats |

| 4 | cats |

| 5 | cats |

| 6 | cats |

| 7 | cats |

| 8 | cats |

| 9 | cats |

| 10 | cats |

| 11 | cats |

| 12 | cats |

| 13 | cats |

| 14 | cats |

| 15 | cats |

| 16 | cats |

| 17 | cats |

| 18 | cats |

| 19 | cats |

| 20 | cats |

| 21 | cats |

| 22 | cats |

| 23 | cats |

| 24 | cats |

| 25 | cats |

| 26 | cats |

| 27 | cats |

| 28 | cats |

| 29 | cats |

| 30 | cats |

| 31 | cats |

| 32 | cats |

| 33 | cats |

| 34 | cats |

| 35 | cats |

| 36 | cats |

| 37 | cats |

| 38 | cats |

| 39 | cats |

| 40 | cats |

| 41 | dogs |

| 42 | dogs |

| 43 | dogs |

| 44 | dogs |

| 45 | dogs |

| 46 | dogs |

| 47 | dogs |

| 48 | dogs |

| 49 | dogs |

| 50 | dogs |

| 51 | dogs |

| 52 | dogs |

| 53 | dogs |

| 54 | dogs |

| 55 | dogs |

| 56 | dogs |

| 57 | dogs |

| 58 | dogs |

| 59 | dogs |

| 60 | dogs |

| 61 | dogs |

| 62 | dogs |

| 63 | dogs |

| 64 | dogs |

| 65 | dogs |

| 66 | dogs |

| 67 | dogs |

| 68 | dogs |

| 69 | dogs |

| 70 | dogs |

| 71 | dogs |

| 72 | dogs |

| 73 | dogs |

| 74 | dogs |

| 75 | dogs |

| 76 | dogs |

| 77 | dogs |

| 78 | dogs |

| 79 | dogs |

| 80 | dogs |

| 81 | dogs |

| 82 | dogs |

| 83 | dogs |

| 84 | dogs |

| 85 | dogs |

| 86 | dogs |

| 87 | dogs |

| 88 | dogs |

| 89 | dogs |

| 90 | dogs |

| 91 | dogs |

| 92 | dogs |

| 93 | dogs |

| 94 | dogs |

| 95 | dogs |

| 96 | parakeets |

| 97 | parakeets |

| 98 | parakeets |

| 99 | potbellypigs |

| 100 | potbellypigs |

Now, as you see with the data above, this is just a string of animals with “id” numbers. It’s essentially nominal (categorical) data. But the chi-square test doesn’t care about participant ID at all.

If we want to run the goodness-of-fit test by hand, we need a summary table of this data. Just the counts! Something like this:

| pet | count |

|---|---|

| cats | 40 |

| dogs | 55 |

| parakeets | 3 |

| potbellypigs | 2 |

This is still nominal data. It’s just tabulated now—the numbers we’re seeing are the counts of how many people responded to each. Regardless, it sure seems like those are uneven counts. So: suppose we want to use a chi-squared test to determine whether there’s a statistically-significant difference. We can do this in two ways in Jamovi. Let’s start with that tabulated data.

In Jamovi, open a blank data set. (Close any data you have now, or go to the hamburger menu and click New.) Label one variable pet, and enter the names as you see above. Label the second variable n, or count, and enter the counts.

Then go to Analyses: Frequencies: N Outcomes (\(\chi^2\) Goodness of fit). Put pet in the Variable, and include count (or n) as the Counts. The output should be telling you: yes, there are differences in the groups! The null hypothesis can be rejected. You can write this up as follows:

There is a difference between expected and observed results in the types of pets participants had, \(\chi^2(3)=85.5, p<.05\).

Remember what I said about probabilities above? The chi-squared test determines whether it is unlikely that the data comes from a distribution of probabilities [likelihoods].

We could explicitly articulate our null hypothesis by saying that we anticipate all probabilities to be the same. Working with the same test in Jamovi, click the Expected Probabilities dropdown. Make sure the Ratio listed is 1 for all levels. (The Proportion will therefore be .25 for each.)

This is just doing the background of the chi-squared formula for you:

\[\chi^2=\sum{\frac{(O-E)^2}{E}}\]

So given the numbers of 40, 55, 3, and 2—the observed values—and the “expected” values if there were 25% response for each animal (in fact: 25, 25, 25, 25)—this is doing the calculation:

\[\chi^2=\sum{\frac{(O-E)^2}{E}}=\frac{(40-25)^2}{25}+\frac{(55-25)^2}{25}+\frac{(3-25)^2}{25}+\frac{(2-25)^2}{25}\] \[=9+36+19.36+21.16=85.52\]

… which, yes, is the same thing our test got us in Jamovi.

The interesting thing about the probabilities is that we can also suggest, e.g., that we anticipate something other than even likelihoods. For example, suppose I thought that 45% of participants would choose dogs, 45% would choose cats, 5% would choose parakeets, and 5% would have pot-bellied pigs. That’s the new null hypothesis—we are asking “are those probabilities representative of what we found?”. What does that look like in Jamovi?

Well, we just change the probabilities from 25% each to the new ones. Jamovi wants ratios; you could put them in as 45, 45, 5, 5, or as 9, 9, 1, 1 (i.e., the simplified fraction), or some other way. Try it out in Jamovi.

They are no longer statistically significant. This means that now we no longer reject the null that the true counts match what we’d expect to see.

We can say that there is no difference between expected and observed results in the types of pets participants had, \(\chi^2(3)=5.3, p=.15\).

Thus, with this null hypothesis, our findings are just about what we expected! Yeah, we’d expect most people to have cats and dogs.

It might also be that case that we’d actually expect 0% pot-bellied pigs, though, even though we do live near a lot of farms. You can put in a 0 to the ratio, but it doesn’t make “sense” conceptually, even though Jamovi will give you a response.

You divide by the expected value in the chi-square test. Dividing by 0 is not possible!

In fact, one of the assumptions of the chi-squared test is that the expected value in each cell is greater than 5. And 0% isn’t going to result in an expected value of anything other than 0!

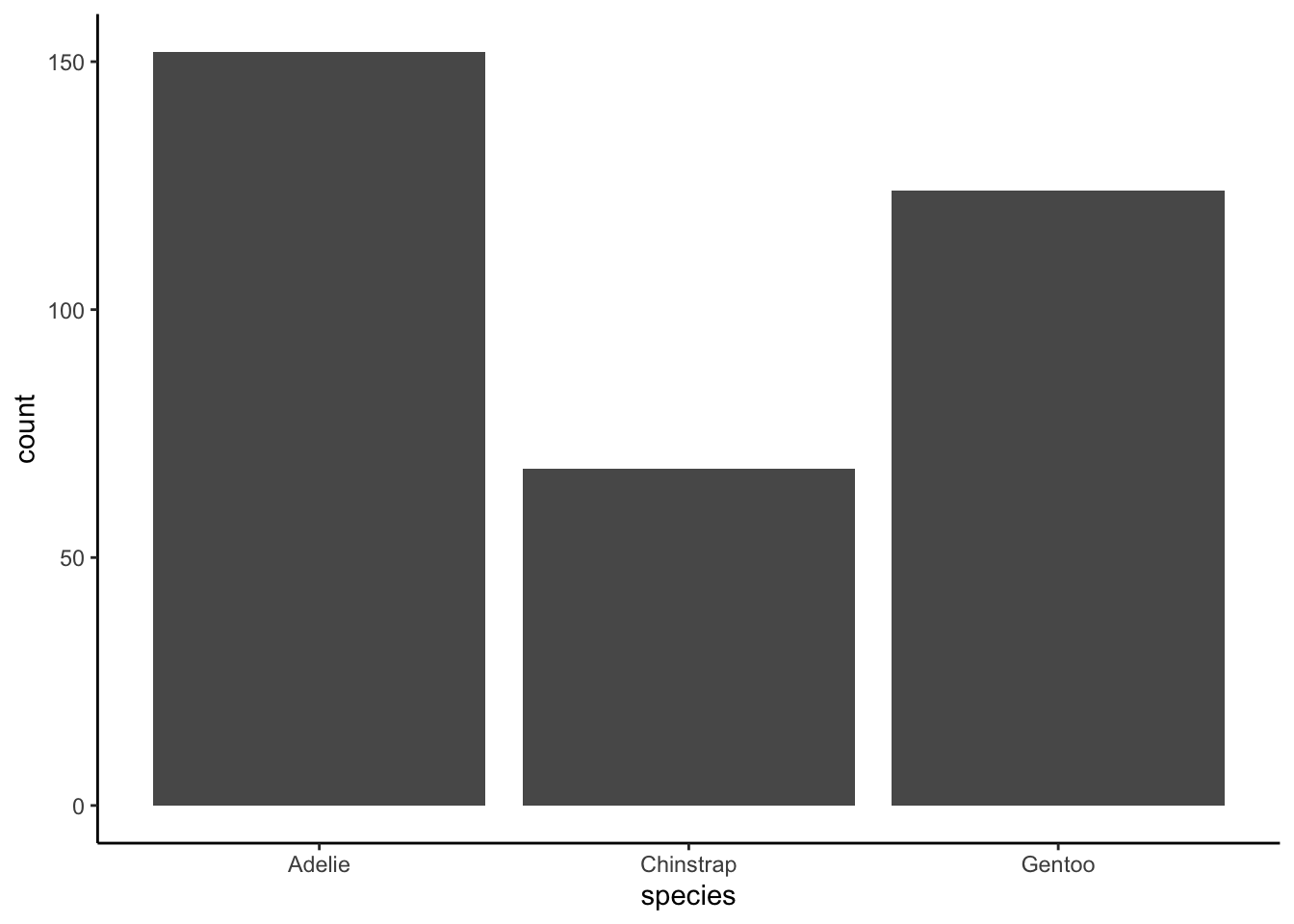

Let’s look at another example of the chi-squared test for goodness of fit, using the penguins data again. You can still find it on Brightspace (or on your computer, or download it here), and open it in Jamovi.

Suppose we wanted to know if the numbers of penguins of each species in the study were significantly different from one another.

| species | count |

|---|---|

| Adelie | 152 |

| Chinstrap | 68 |

| Gentoo | 124 |

Seems like a substantial difference, no? Well, is it?

This time, rather than adding the counts, just put species in the Variable field. Jamovi will automatically tabulate them for you—you should see the same numbers as above. Determine if those are different numbers per group.

Definitely, yes! Your results should show significant differences from the expected “even” distribution, \(\chi^2(2)=31.9, p<.05\).

As a note: you could plot these, just as you can plot any categorical data. As you may recall from the lecture on visualizations, I recommend a sorted frequency bar plot if you have more than five categories. For penguin species, it’s probably no help to sort. Something like the following would be fine:

Okay! Hopefully you’re feeling like you get what the chi-square test for goodness of fit looks like!

Let’s turn to…

Chi-square test for independence

In the test of independence, we test hypotheses about the relationship between two nominal variables, comparing observed frequencies to the frequencies we would expect if there were no relationship.

Let’s use a data-set called color:

| ColorPref | Personality |

|---|---|

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Introvert |

| Green | Extravert |

| Green | Extravert |

| Green | Extravert |

| Green | Extravert |

| Green | Extravert |

| Green | Extravert |

| Yellow | Extravert |

| Yellow | Extravert |

| Yellow | Extravert |

| Yellow | Introvert |

| Yellow | Introvert |

| Yellow | Introvert |

| Yellow | Introvert |

| Yellow | Introvert |

| Yellow | Introvert |

| Yellow | Introvert |

| Yellow | Extravert |

| Yellow | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Extravert |

| Red | Introvert |

| Red | Introvert |

| Red | Introvert |

| Red | Introvert |

| Red | Extravert |

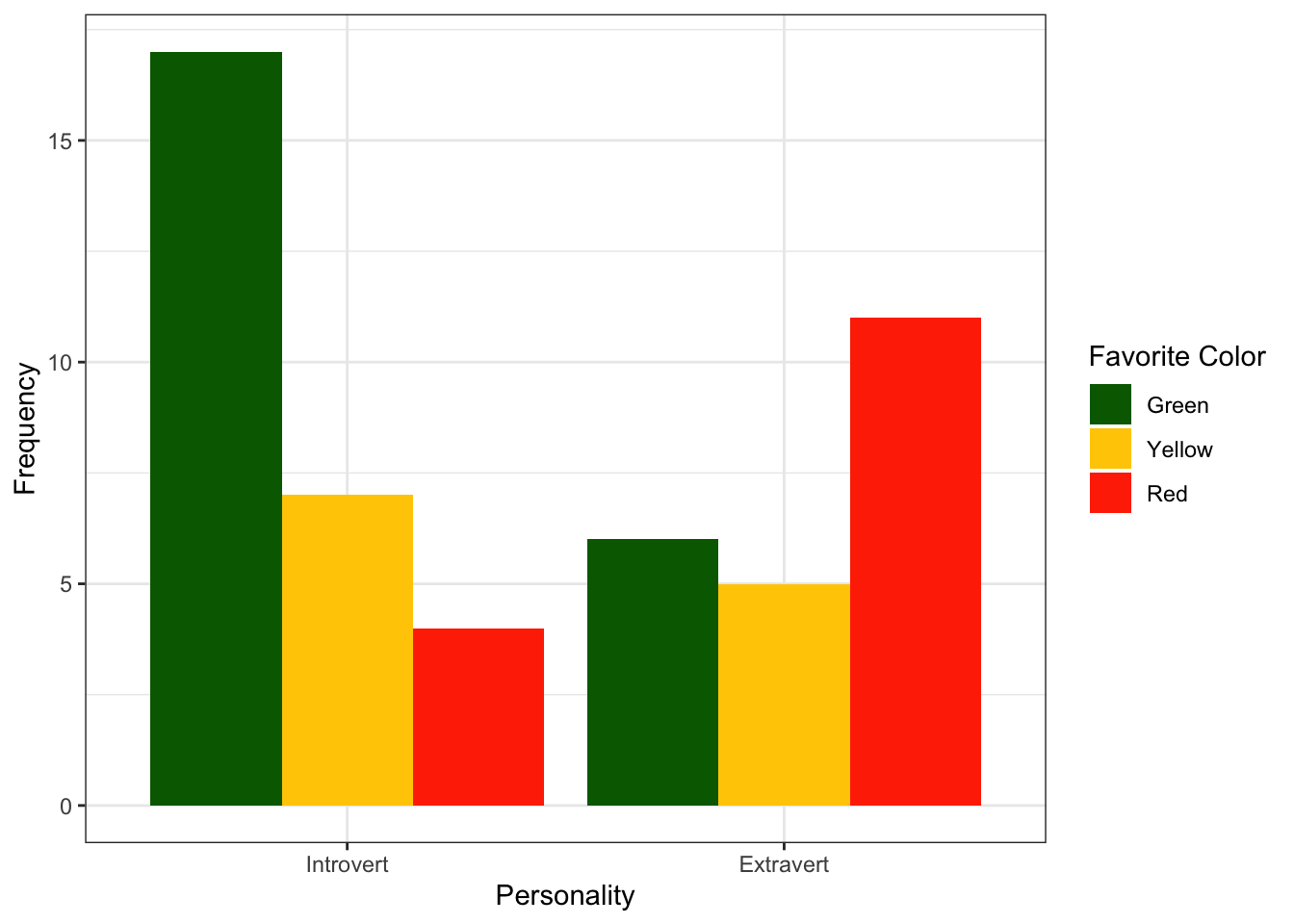

Take a look above. Imagine that the “research question” is: does personality type (Personality) affect favorite color (ColorPref)?

Again, we can get a table of these—looks similar to the cells in a factorial ANOVA, huh? This is called a contingency table. It shows counts organized by cells.

| Green | Yellow | Red | |

|---|---|---|---|

| Introvert | 17 | 7 | 4 |

| Extravert | 6 | 5 | 11 |

From this table, you should see that we might ask a question like “do introverts have a different level of liking for these colors than extraverts?” Again, what we have here are categorical variables—but we can ask a question about who falls into which category. (The numbers here are counts based on those categorical variables.)

Before we go further, let’s try plotting these cells out. Much like with a factorial ANOVA, the plot is often the best way of figuring something like this out. Looking at the plot below, do you think that there is a difference in counts between personalities in this (made up) sample? What is your hypothesis based on the plot?

Don’t worry; you can make a table quite like this in Jamovi. (You could make it in Excel or Google Sheets, too, but I won’t force you.) Copy the data from the table above (not including the header line that says ColorPref Personality). Make a new dataset in Jamovi. Paste the data in, and then label the variables ColorPref and Personality. Go to Analyses: Frequencies: Independent Samples (\(\chi^2\) test of association). Put Personality in Rows and put ColorPref in Columns. Then, scroll down to Plots and check Bar Plot.

Your plot should match this in all but color. (You can also flip the axes under where it says “X-Axis”.)

You can also enter the contingency table into Jamovi. Clear the data again (hamburger menu: New). Label your three variables Personality, ColorPref, and n. Then enter the data as follows (copy this or enter it manually):

| Personality | ColorPref | n |

|---|---|---|

| Introvert | Green | 17 |

| Introvert | Yellow | 7 |

| Introvert | Red | 4 |

| Extravert | Green | 6 |

| Extravert | Yellow | 5 |

| Extravert | Red | 11 |

You should be able to make the same plot, this time by adding the n column as your counts.

Okay, let’s look at the results of the chi-squared test. No, these are not independent variables. We can write this up as follows:

Groups were not independent—there was a link between personality and color, \(\chi^2(2)=8.26,p<.05\).

Fortunately, the mechanics of running this kind of test in Jamovi aren’t any different from running a chi-squared test of independence!

Some goodness of fit practice

Imagine that we get some data on rolling a six-sided die 150 times. You get 150 numbers between 1 and 6, representing your throwing a die over and over again. I’ve made that fake data and tabulated it for you:

| rolls | count |

|---|---|

| 1 | 39 |

| 2 | 23 |

| 3 | 18 |

| 4 | 12 |

| 5 | 19 |

| 6 | 39 |

Let’s say we want to run a chi-square test of goodness of fit on this table.

That the die is NOT fitting our expected values—we’re not seeing even choices of numbers 1-6 on this die. Maybe it’s a cheat or loaded die?

Run the chi-square test. Report the results on your answer sheet as #2 on your answer sheet.

An independence example

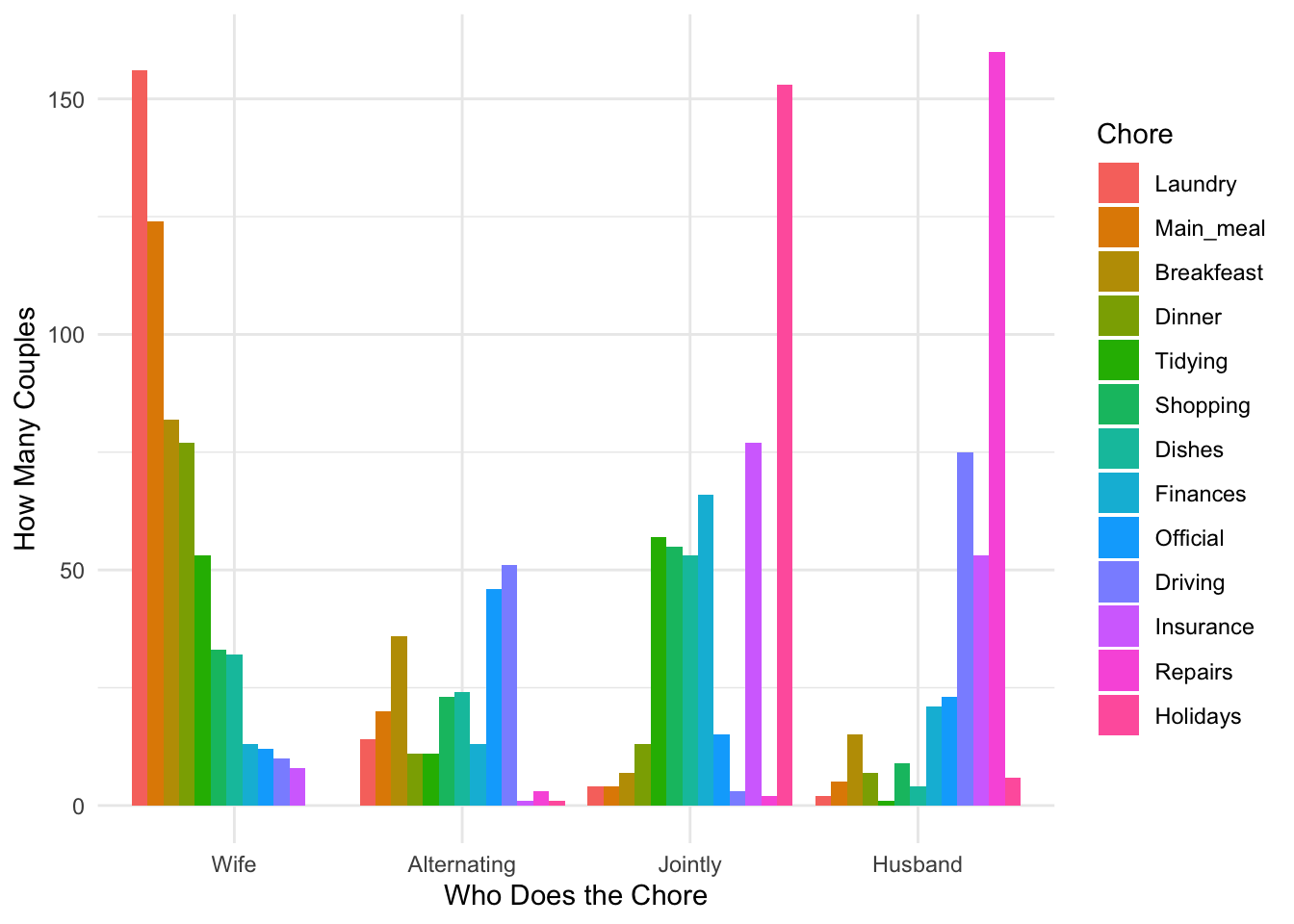

The data below is a “contingency table” which contains 13 house tasks and their distribution in heterosexual couples. Rows are the different tasks; values are the frequencies of the tasks done: by the wife only, by the husband only, by alternating partners, or together.

| Wife | Alternating | Husband | Jointly | |

|---|---|---|---|---|

| Laundry | 156 | 14 | 2 | 4 |

| Main_meal | 124 | 20 | 5 | 4 |

| Dinner | 77 | 11 | 7 | 13 |

| Breakfeast | 82 | 36 | 15 | 7 |

| Tidying | 53 | 11 | 1 | 57 |

| Dishes | 32 | 24 | 4 | 53 |

| Shopping | 33 | 23 | 9 | 55 |

| Official | 12 | 46 | 23 | 15 |

| Driving | 10 | 51 | 75 | 3 |

| Finances | 13 | 13 | 21 | 66 |

| Insurance | 8 | 1 | 53 | 77 |

| Repairs | 0 | 3 | 160 | 2 |

| Holidays | 0 | 1 | 6 | 153 |

| chore | who | number |

|---|---|---|

| Laundry | Wife | 156 |

| Laundry | Husband | 2 |

| Laundry | Alternating | 14 |

| Laundry | Jointly | 4 |

| Main_meal | Wife | 124 |

| Main_meal | Husband | 5 |

| Main_meal | Alternating | 20 |

| Main_meal | Jointly | 4 |

| Dinner | Wife | 77 |

| Dinner | Husband | 7 |

| Dinner | Alternating | 11 |

| Dinner | Jointly | 13 |

| Breakfeast | Wife | 82 |

| Breakfeast | Husband | 15 |

| Breakfeast | Alternating | 36 |

| Breakfeast | Jointly | 7 |

| Tidying | Wife | 53 |

| Tidying | Husband | 1 |

| Tidying | Alternating | 11 |

| Tidying | Jointly | 57 |

| Dishes | Wife | 32 |

| Dishes | Husband | 4 |

| Dishes | Alternating | 24 |

| Dishes | Jointly | 53 |

| Shopping | Wife | 33 |

| Shopping | Husband | 9 |

| Shopping | Alternating | 23 |

| Shopping | Jointly | 55 |

| Official | Wife | 12 |

| Official | Husband | 23 |

| Official | Alternating | 46 |

| Official | Jointly | 15 |

| Driving | Wife | 10 |

| Driving | Husband | 75 |

| Driving | Alternating | 51 |

| Driving | Jointly | 3 |

| Finances | Wife | 13 |

| Finances | Husband | 21 |

| Finances | Alternating | 13 |

| Finances | Jointly | 66 |

| Insurance | Wife | 8 |

| Insurance | Husband | 53 |

| Insurance | Alternating | 1 |

| Insurance | Jointly | 77 |

| Repairs | Wife | 0 |

| Repairs | Husband | 160 |

| Repairs | Alternating | 3 |

| Repairs | Jointly | 2 |

| Holidays | Wife | 0 |

| Holidays | Husband | 6 |

| Holidays | Alternating | 1 |

| Holidays | Jointly | 153 |

Look at the table and the plot, and make some informed guesses: in these data, are chores done evenly or unevenly?

Then use the second of the above tables (not the contingency table)—again, copy and paste into Jamovi; you may need to scroll down—to (a) recreate (as much as you can) the above graph and (b) test for independence. (This is actually a place where the stacked bar option might make sense?) Include the plot and the results as #3 on your answer sheet.

That’s the end of the lab! Feel free to meet with your group for the group project.

More Penguins (and Factorial ANOVAs)

If you’d like extra practice on factorial ANOVA, try this out with the penguin data again.

Once you have the data open, use a Filter to ignore the penguins for whom sex is not known (i.e., set sex != NA in the filter.

Please remember that because NA is a special designation (it means “not available” here), it doesn’t have quotes. But normally it’s only the variable names, or numbers, that don’t have quotation marks. If we were only trying to filter to male penguins, we’d write sex == "male".

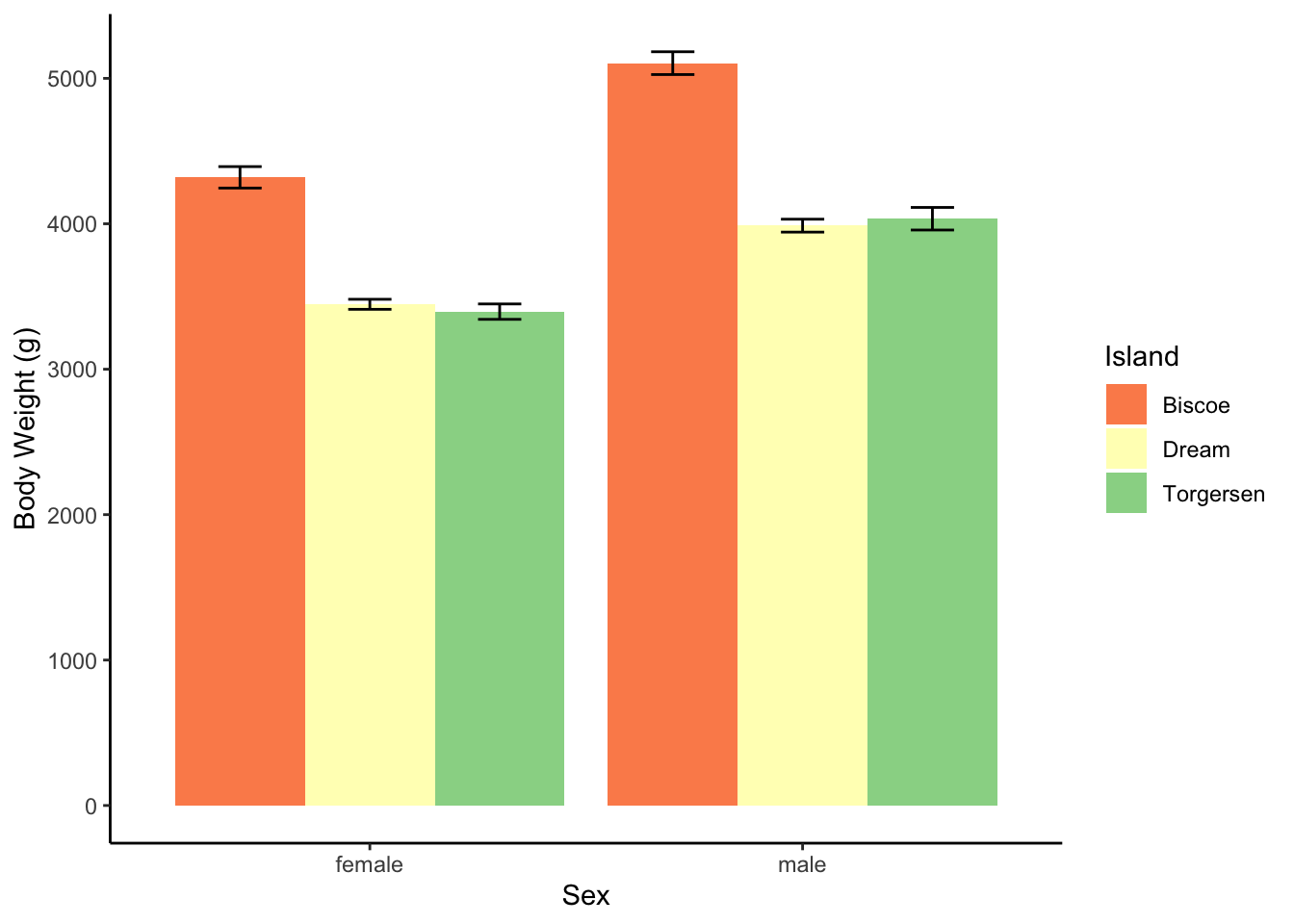

Use the ANOVA menu to answer whether there is an interaction between penguin sex and the island they live on in predicting body_mass_g. Then practice writing up the results. Once you’re done, click through to see my answer.

| Sum of Squares | df | Mean Square | F | p | |

|---|---|---|---|---|---|

| sex | 2.71 × 107 | 1 | 2.71 × 107 | 95.844 | < .001 |

| island | 8.25 × 107 | 2 | 4.12 × 107 | 145.575 | < .001 |

| sex * island | 1.06 × 106 | 2 | 5.32 × 105 | 1.877 | 0.155 |

| Residuals | 9.26 × 107 | 327 | 2.83 × 105 | — | — |

There was no interaction between sex and island, \(F(2, 327)=1.88,p=.16\), but there was a main effect of sex, \(F(1, 327)=95.84,p<.05\) and a main effect of island, \(F(2,327)=145.58,p<.05\).

| M | SD | n | sem | |

|---|---|---|---|---|

| female | ||||

| Biscoe | 4319.38 | 659.75 | 80 | 73.76 |

| Dream | 3446.31 | 269.52 | 61 | 34.51 |

| Torgersen | 3395.83 | 259.14 | 24 | 52.90 |

| male | ||||

| Biscoe | 5104.52 | 714.20 | 83 | 78.39 |

| Dream | 3987.10 | 349.52 | 62 | 44.39 |

| Torgersen | 4034.78 | 372.47 | 23 | 77.67 |

Get Jamovi to make you a plot as well. Which island has heavier penguins? Which sex is heavier?

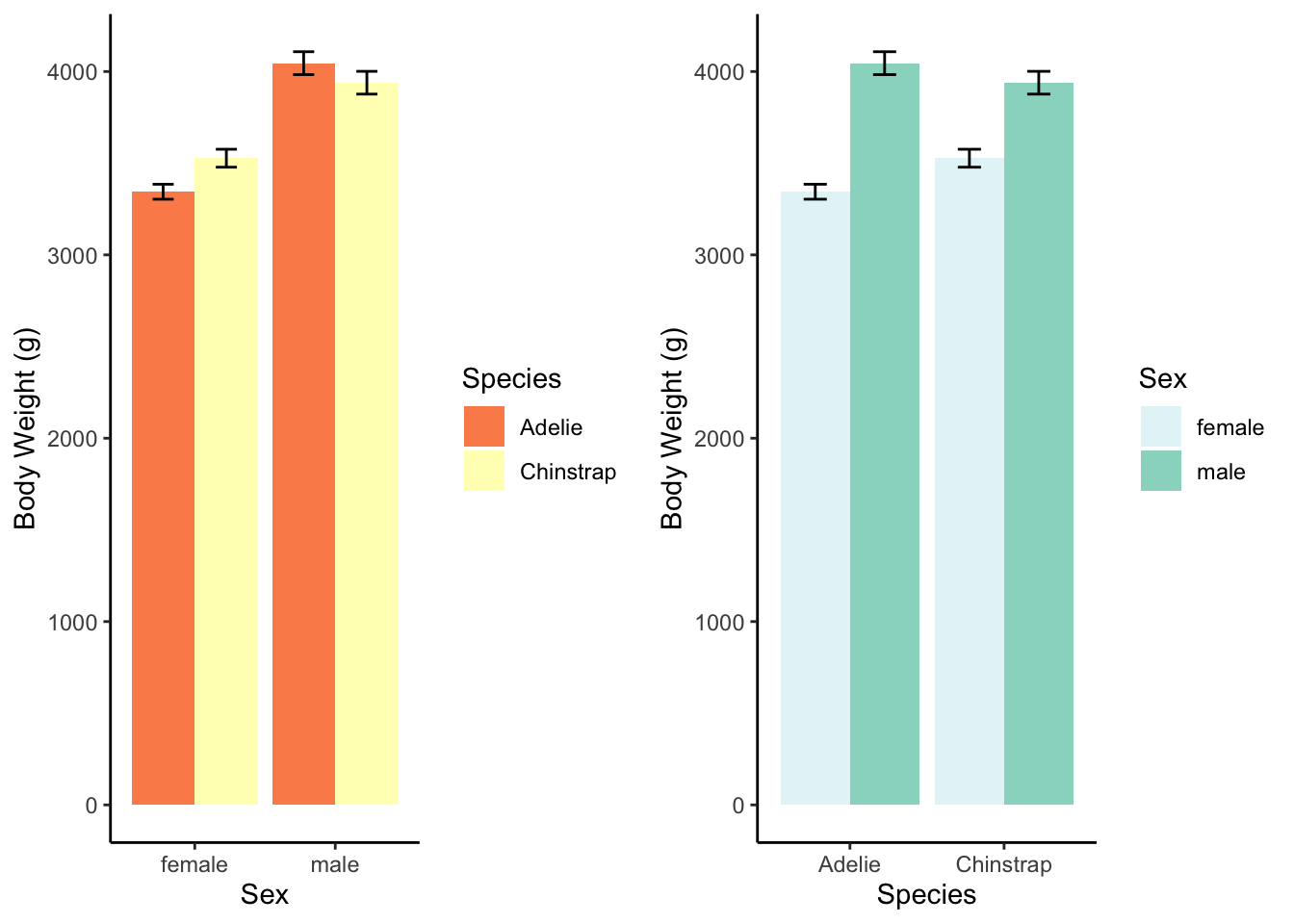

Dream penguins only

Filter to only penguins who lives on the island Dream. (Remember to use quotation marks.) Then run an ANOVA to determine whether body_mass_g is predicted by the interaction between sex and species on Dream.

Make a plot, and try to answer the questions about which sex/species is heavier. Is there an interaction? Practice writing up the results. Once you’re done, click through to see my answer.

| Sum of Squares | df | Mean Square | F | p | |

|---|---|---|---|---|---|

| sex | 9.41 × 106 | 1 | 9.41 × 106 | 100.604 | < .001 |

| species | 4.41 × 104 | 1 | 4.41 × 104 | 0.472 | 0.494 |

| sex * species | 6.36 × 105 | 1 | 6.36 × 105 | 6.800 | 0.01 |

| Residuals | 1.11 × 107 | 119 | 9.36 × 104 | — | — |

There was an interaction between sex and species, \(F(1, 119)=6.8,p<.05\). There was also a main effect of sex, \(F(1, 119)=100.6,p<.05\). There was no main effect of species, \(F(1,119)=0.47,p=.49\). (There were only two species on the Dream island.)

| M | SD | n | sem | |

|---|---|---|---|---|

| female | ||||

| Adelie | 3344.44 | 212.06 | 27 | 40.81 |

| Chinstrap | 3527.21 | 285.33 | 34 | 48.93 |

| male | ||||

| Adelie | 4045.54 | 330.55 | 28 | 62.47 |

| Chinstrap | 3938.97 | 362.14 | 34 | 62.11 |

The interaction, explained: Adelie males are heavier than Chinstrap males, but Adelie females are not as heavy as Chinstrap females. But the species are roughly similar in weight.

Reuse

Citation

@online{dainer-best2024,

author = {Dainer-Best, Justin},

title = {Factorial {ANOVA} and {Chi-Square} {(Lab} 11)},

date = {2024-04-25},

url = {https://faculty.bard.edu/jdainerbest/stats/labs//posts/11-factorial-anova-chi-sq},

langid = {en}

}